In our digital age, our data forms an invisible trail that is constantly collected, used, and shared. This information fuels innovation and convenience but also raises critical moral questions. This article examines the moral issues surrounding data collection, use, and sharing, sheds light on the growing role of personal data in society, and explores how data privacy helps evaluate moral problems related to data and supports responsible data practices moving forward.

How does data privacy evaluate moral problems related to data?

While data offers undeniable benefits, moral concerns arise when it’s misused:

Discrimination and prejudice based on data: Algorithms analyzing our data can perpetuate societal biases. Loan applications may be denied, job opportunities missed, or insurance rates raised, all because the data reflects historical prejudices rather than individual merit. This raises a crucial question: Should algorithms be held accountable for perpetuating discrimination?

Surveillance and tracking violations: With ever-increasing data collection, the line between security and privacy blurs. Persistent surveillance may suppress freedom of speech and movement. Finding the balance between legitimate surveillance to prevent harm and unwarranted intrusion into our lives is a complex moral challenge.

Abuse of personal data: Data breaches and unauthorized access threaten the security of our personal information. Imagine your financial details, health records, or even private messages leaked online. This prompts the inquiry: who is accountable for protecting our data, and what options are available if it is misappropriated?

Lack of transparency and control for users: Often, we lack a clear understanding of how our data is collected, used, or shared. Consent forms can be lengthy and confusing, making it difficult to know exactly what we agree to. The lack of transparency and control over our personal information raises a fundamental moral issue: who truly owns our data in the digital age?

The role of data privacy in evaluating moral problems related to data

While the moral issues surrounding data are complex, data privacy offers a potential solution. Let’s explore how:

Data privacy as a potential solution to moral issues: Strong data privacy practices can help mitigate the ethical problems discussed above. By restricting data collection, promoting transparency in its usage, and granting users control, data privacy can help decrease discrimination, prevent data misuse, and give individuals a renewed sense of empowerment.

Moral principles of data privacy

Data privacy is built on core moral principles. These include:

- Individual Autonomy: The right to manage your personal information.

- Fairness and non-discrimination: Data practices should not unfairly disadvantage individuals.

- Transparency and accountability: Organizations should be transparent about how they collect and use data and be held responsible for breaches.

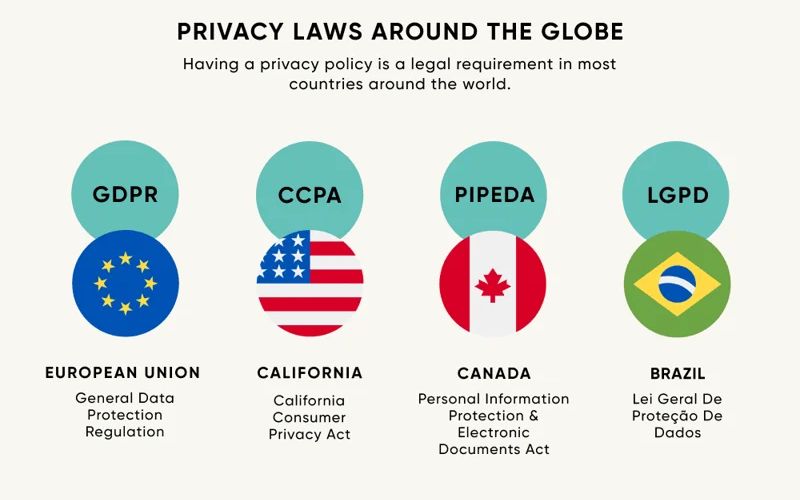

Data Privacy Laws and Regulations

Numerous countries are implementing laws and regulations to uphold these ethical standards. These laws may allow users to access and correct their data, restrict its use, and even request deletion. Understanding these legal frameworks empowers individuals to hold organizations accountable for responsible data practices.

How does data privacy evaluate moral problems related to data?

Data privacy evaluates moral problems related to data by considering the ethical implications of data use and ensuring that individual privacy and rights are maintained. This involves several fundamental principles:

- Consent: Ensuring that individuals have given informed and explicit consent for their data to be collected, stored, and used.

- Transparency: Providing clear and accessible information about data collection and usage methods to ensure individuals understand how their data will be used and can express informed consent.

- Accountability: Holding organizations accountable for any lapses in data security and ensuring they are legally and ethically responsible for protecting sensitive information.

- Limiting Damage: Implementing encryption and firewalls to minimize the potential harm caused by data breaches or unauthorized access.

- Addressing Biases: Regularly examining algorithms and models for bias and resolving any concerns to ensure equitable decision-making.

- Promoting Equitable Opportunities: Avoiding discriminatory behaviors and ensuring data-driven choices do not exacerbate inequities.

- Regulatory Compliance: Adhering to data protection regulations and laws, which vary globally, to ensure uniform and comprehensive data ethics standards.

By considering these principles, data privacy addresses moral problems related to data by balancing individual rights with the interests of businesses and society, ultimately fostering trust and responsible data practices.

Moral data assessment: Methods and limitations

As we strive for a more moral data landscape, the question becomes: how do we assess the morality of data practices?

Existing methods for moral data assessment

One prevalent method is the adoption of moral frameworks and guidelines. These frameworks offer a set of principles, such as fairness, accountability, transparency, and respect for privacy, to guide the evaluation of data practices.

Another approach is stakeholder engagement, which involves consulting with diverse groups impacted by data practices to gather various perspectives and values.

Additionally, impact assessments, including moral and social impact assessments, are used to systematically explore the potential effects of data projects on individuals and communities. Techniques like algorithmic audits and fairness metrics are also employed to identify and mitigate biases within data and algorithms.
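To make the idea of a fairness metric concrete, here is a minimal sketch of one common measure, the demographic parity difference, which compares approval rates across groups. The group labels and the loan decisions are hypothetical sample data, and the function names are illustrative assumptions rather than part of any particular auditing tool.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_difference(decisions):
    """Gap between the highest and lowest group approval rates (0 means parity)."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan decisions for two groups, "A" and "B".
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

print(selection_rates(sample))
print(demographic_parity_difference(sample))
```

A large gap does not prove discrimination on its own, but it flags a decision process that deserves a closer audit.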

Challenges with Current Approaches

A significant challenge is the abstract nature of moral principles, which complicates their operationalization and consistent application in tangible scenarios. This ambiguity can lead to diverse interpretations and implementations, potentially diluting the effectiveness of ethical guidelines.

Moreover, stakeholder engagement can be hindered by unequal power dynamics and issues of representation, leading to the marginalization of certain voices. Impact assessments, while valuable, often depend on predictions and assumptions that may only partially account for the long-term and complex impacts of data practices.

Algorithmic audits and fairness metrics, despite their utility, face technical challenges and may not address all forms of bias or moral concerns. They tend to focus on quantifiable aspects and can overlook broader ethical issues.

The need for more comprehensive and effective assessment methods

There is an increasing awareness that moral considerations in data practices demand not only the application of principles but also the development of robust methodologies capable of adapting to changing technologies and societal values. This includes crafting more nuanced and flexible moral frameworks tailored to specific contexts and technologies. It also means improving stakeholder engagement processes to ensure more inclusive and equitable participation.

Moreover, there is a call for innovative tools and techniques that can offer deeper insights into the moral implications of data practices. This encompasses advanced analytics for detecting biases and participatory methods for impact evaluation. Addressing these needs will require a concerted effort across disciplines, sectors, and communities to navigate the moral complexities of the digital era effectively.

Solutions to moral issues related to data

The moral challenges around data are significant, but there are solutions. Let’s explore ways to promote more responsible data use.

Strategies to improve ethical practices in data usage

- Technical solutions: Implementing data minimization practices, anonymization techniques, and secure storage can minimize risks.

- Policy solutions: Strengthening data privacy laws, requiring transparency in data use, and establishing clear user rights can create a more moral framework.

- Algorithmic fairness: Developing and deploying algorithms that are checked for bias and do not perpetuate discrimination.
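As a rough illustration of the technical solutions above, the following sketch combines data minimization (keeping only the fields a task needs) with pseudonymization (replacing a direct identifier with a keyed hash). The field names, the `ALLOWED_FIELDS` set, and the helper functions are hypothetical. Note that pseudonymized data can still be re-identified by anyone holding the key, so this is weaker than full anonymization.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields an analytics task is assumed to need.
ALLOWED_FIELDS = {"user_id", "country", "signup_year"}


def minimize(record, allowed=ALLOWED_FIELDS):
    """Return a copy of the record containing only the allowed fields."""
    return {key: value for key, value in record.items() if key in allowed}


def pseudonymize(value, secret_key):
    """Replace an identifier with a keyed SHA-256 hash (HMAC) of its value."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_analytics(record, secret_key):
    """Drop unneeded fields, then pseudonymize the remaining identifier."""
    slim = minimize(record)
    if "user_id" in slim:
        slim["user_id"] = pseudonymize(str(slim["user_id"]), secret_key)
    return slim


# Hypothetical record; in practice the key would come from a secrets manager.
record = {
    "user_id": 42,
    "email": "person@example.com",
    "country": "DE",
    "signup_year": 2021,
}
print(prepare_for_analytics(record, b"demo-key-not-for-production"))
```

Using a keyed hash rather than a plain hash makes it harder for an outsider to reverse the identifier by hashing guessed values.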

The responsibilities of different stakeholders

- Government: Enact strong data privacy laws, enforce regulations, and promote research in moral data practices.

- Businesses: Commit to data minimization, implement robust security measures, and offer users clear and transparent data practices.

- Individuals: Educate themselves about data privacy, understand their rights, and make informed choices about sharing their data.

The significance of promoting awareness of ethical data use issues

Public awareness is crucial. Educating individuals and businesses about the moral implications of data practices can foster a collective responsibility for building a more moral data landscape. This could involve educational campaigns, promoting data literacy, and encouraging open dialogue about the moral use of data. Framing these conversations around data privacy also highlights the ethical dimensions and promotes discussion of how data should be handled responsibly.

Government’s role in promoting data ethics

- Establishing Regulatory Frameworks: Governments implement legal standards for ethical data use through regulatory frameworks, ensuring that practices adhere to principles such as privacy and fairness. This compels organizations to adopt moral data handling, safeguarding individual rights.

- Funding research and development: By investing in research on ethical AI and data practices, governments facilitate the development of technologies that are transparent, fair, and accountable. This helps to reduce biases in data usage.

- Promoting public awareness and education: Governments play a crucial role in educating the public about data ethics, including the importance of data privacy and the moral implications of AI. This enables individuals to make knowledgeable choices and allows professionals to integrate ethical considerations into their work.

- Facilitating multi-stakeholder dialogues: Governments can act as mediators, bringing together tech companies, civil society, and academia to discuss data ethics, ensuring diverse perspectives shape moral standards and policies.

Corporate responsibility for ethical data usage

Businesses bear immense responsibility for using data morally. They are entrusted with vast amounts of our personal information, and how they handle it profoundly impacts individuals and society.

- Transparency and user control: Businesses must be transparent about how they collect, use, and share user data. Users should have easy-to-understand options to control what data is collected and how it is used. This involves implementing straightforward opt-in and opt-out options for data sharing.

- Security and minimization: Robust security measures are essential to protect user data from breaches and unauthorized access. Additionally, businesses should collect only the data necessary for legitimate purposes and avoid unnecessary data hoarding.

- Fairness and non-discrimination: Algorithms and data-driven decision-making processes should be free from biases that could unfairly disadvantage individuals. Businesses should actively work to ensure their data practices promote fairness and equal opportunity.

- Accountability and user rights: Businesses are accountable for responsible data stewardship. They should empower users to access their data, rectify inaccuracies, and request deletion in accordance with relevant regulations.

- Respecting privacy: Data privacy is a fundamental right. Businesses must respect user privacy by obtaining informed consent for data collection and avoiding practices like intrusive surveillance.
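The opt-in and opt-out options mentioned above can be sketched as a small consent record that is checked before data is used for any purpose. This is only an illustrative sketch: the `ConsentRecord` class, the purpose names, and the `data_for_purpose` helper are hypothetical, and a real system would also need to store timestamps, policy versions, and an audit trail.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    """Tracks which purposes a user has explicitly opted in to."""
    user_id: str
    granted: set = field(default_factory=set)  # empty by default: nothing is allowed

    def opt_in(self, purpose):
        self.granted.add(purpose)

    def opt_out(self, purpose):
        self.granted.discard(purpose)

    def allows(self, purpose):
        return purpose in self.granted


def data_for_purpose(consent, purpose, data):
    """Return the data only if the user has opted in to this purpose."""
    return data if consent.allows(purpose) else None


consent = ConsentRecord(user_id="u-1")
profile = {"interests": ["cycling"]}

print(data_for_purpose(consent, "marketing", profile))  # no consent given yet
consent.opt_in("marketing")
print(data_for_purpose(consent, "marketing", profile))  # consent granted
consent.opt_out("marketing")
print(data_for_purpose(consent, "marketing", profile))  # consent withdrawn
```

Starting from an empty set of granted purposes means that sharing is off until the user actively opts in, and opting out takes effect immediately.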

Why is it essential to focus on different moral dimensions in data ethics?

It is essential to focus on different moral dimensions in data ethics because data ethics encompasses a broad range of ethical concerns that go beyond the information itself. Data ethics involves considering the moral implications of data collection, storage, processing, dissemination, sharing, and use, as well as the algorithms and practices involved.

Key Moral Dimensions

- Data Privacy: Ensuring that individual privacy and rights are maintained, including the right to access, correct, or delete personal data.

- Transparency and Accountability: Being transparent about data collection practices, usage, and sharing, taking responsibility for data practices, acknowledging mistakes, and taking corrective action.

- Fair Decision Making: Ensuring that data-driven decision-making processes are fair and unbiased, avoiding perpetuating biases or unfair practices.

- User Autonomy and Rights: Respecting user rights, giving users control over their data, respecting their choices, and not manipulating them using data-driven insights.

- Innovation Responsibility: Developing and deploying data-driven technologies responsibly, considering the broader implications of these technologies on human rights and societal values.

- Data Security and Protection: Safeguarding sensitive information against breaches, unauthorized access, or misuse. Data protection is not just about avoiding legal consequences but also about respecting users’ trust in organizations.

- Economic and Social Value: Ensuring data use leads to financial benefits and addresses significant societal challenges without compromising individual rights or public trust.

- Long-term Perspective: Considering the long-term implications of data use, ensuring that short-term gains do not lead to long-term harm, and fostering sustainability and resilience in data practices.

Importance of Focusing on Different Moral Dimensions

Focusing on these different moral dimensions is crucial because it ensures that data ethics addresses the diverse ethical implications of data science within a consistent, holistic, and inclusive framework. This approach helps to avoid narrow, ad hoc approaches and promotes morally good solutions that balance individual rights with the interests of businesses and society.

What are the critical considerations regarding responsible innovation and data privacy?

Key considerations regarding responsible innovation and data privacy include:

Responsible Innovation

- Privacy by Design: Incorporating privacy considerations throughout the development life cycle for any AI system, including using privacy-enhancing technologies, anonymizing data, and adopting robust security measures.

- Transparency and Consent: Clear communication and consent mechanisms should empower individuals to make informed decisions about their data. Organizations should advocate for standardized privacy policies and provide individuals with understandable and accessible information regarding how their data is collected, processed, and used.

- Data Protection and Governance: Implementing robust data governance plans to ensure data is managed effectively throughout its life cycle, including regular testing by a qualified human.

- Ethical Frameworks and Regulatory Standards: Establishing enforceable regulations will help safeguard individuals’ privacy rights, curbing potential abuses of AI technologies. Business leaders can play a pivotal role in facilitating the development of these regulations.

- Data Management and Minimization: Ensuring data is managed effectively and minimized to prevent breaches and maintain privacy.

Data Privacy

- Data Masking and Authorization Management: Protecting data subjects by using data masking and authorization management within the organization.

- Differential Privacy: Using advanced anonymization technologies like differential privacy to gain insights from data without compromising user anonymity.

- Private Join and Compute: Allowing organizations to accurately compute and draw valuable insights from aggregate statistics while keeping individual data safe.

- Federated Learning: Training machine learning models on devices rather than in the cloud, preserving user privacy by keeping personal information on devices.

- Adversarial Training and Detection: Implementing measures to detect and clean adversarial inputs and using adversarial training to mitigate external attacks.

- Data Localization and Access Management: Ensuring data is stored and processed locally and access is restricted to authorized personnel.

- Regular Audits and Testing: Conducting regular audits and testing to ensure adequate data protection and privacy measures.

- Data Protection Office Reviews: Ensuring automated decision-making and the underlying personal data are transparent and interpretable to data subjects.

These considerations help ensure that data privacy and responsible innovation are integrated into the development and deployment of AI systems, maintaining trust and protecting individual rights.
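Of the techniques listed above, differential privacy is one that can be illustrated in a few lines. The sketch below applies the Laplace mechanism to a counting query: because adding or removing one person changes a count by at most 1, noise drawn from a Laplace distribution with scale 1/epsilon is added to the true count. The function names and the sample ages are hypothetical, and Python's `random` module is used only for illustration. A production system should rely on a vetted differential privacy library and a secure noise source.

```python
import random


def laplace_noise(scale, rng=random):
    """Sample Laplace(0, scale) noise as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon, rng=random):
    """Return a noisy count of the values that satisfy the predicate.

    A count has sensitivity 1, so the Laplace noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for value in values if predicate(value))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical data: how many people are 40 or older?
ages = [23, 35, 41, 52, 67, 70]
print(private_count(ages, lambda age: age >= 40, epsilon=0.5))
```

Each call returns a different noisy answer, so repeated queries on the same data consume more of the privacy budget and need to be limited in practice.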

Although data offers significant advantages, its ethical implications demand thorough scrutiny. Data privacy provides a way to evaluate moral problems related to data and serves as a safeguard, ensuring responsible data practices and mitigating issues like discrimination and lack of user control. Creating a more ethical data landscape necessitates collaboration among governments, businesses, and individuals. By uniting and prioritizing data privacy, we can leverage data’s potential for good while safeguarding our fundamental rights in the digital era.
